In September 2020, Microsoft purchased an exclusive license to the underlying technology behind GPT-3, an AI language tool built by OpenAI. Now, the Redmond, Washington-based tech giant has announced its first commercial use case for the program: an assistive feature in the company’s PowerApps software that turns natural language into readymade code.

The feature is limited in its scope and can only produce formulas in Microsoft Power Fx, a simple programming language derived from Microsoft Excel formulas that’s used mainly for database queries. But it shows the huge potential for machine learning to help novice programmers by functioning as an autocomplete tool for code.

“There’s massive demand for digital solutions but not enough coders out there. There’s a million-developer shortfall in the US alone,” Charles Lamanna, CVP of Microsoft’s Low Code Application Platform, tells The Verge. “So instead of making the world learn how to code, why don’t we make development environments speak the language of a normal human?”

Autocomplete for coders

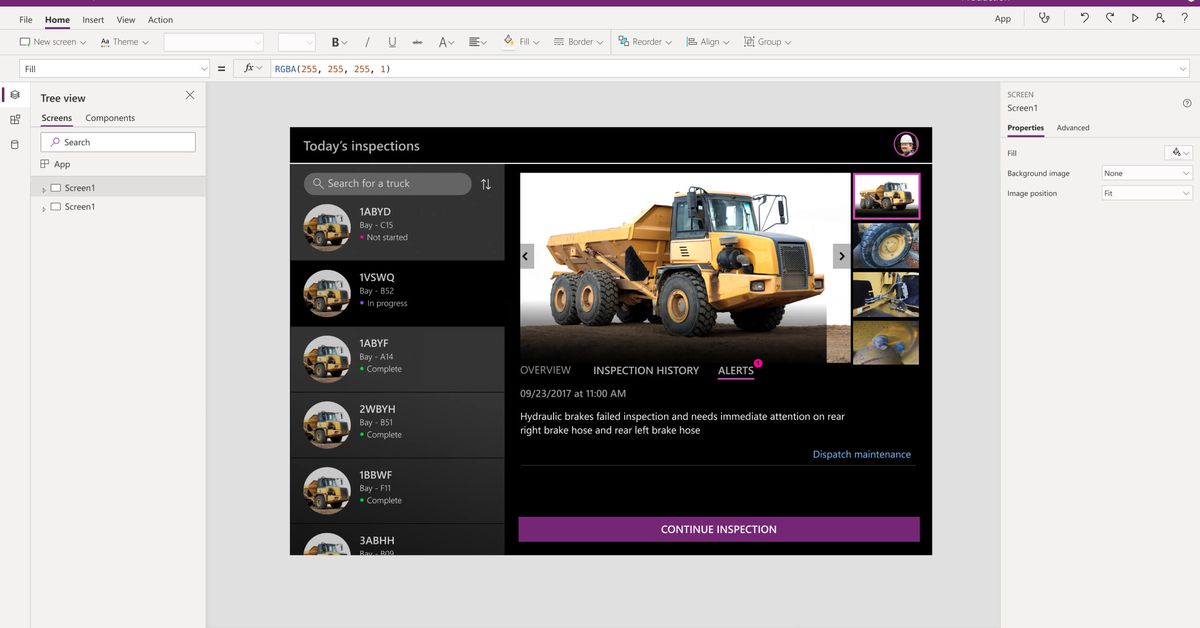

Microsoft has been pursuing this vision for a while through Power Platform, its suite of “low code, no code” software aimed at enterprise customers. These programs run as web apps and help companies that can’t hire experienced programmers tackle basic digital tasks like analytics, data visualization, and workflow automation. GPT-3’s talents have found a home in PowerApps, a program in the suite used to create simple web and mobile apps.

Lamanna demonstrates the software by opening up an example app built by Coca-Cola to keep track of its supplies of cola concentrate. Elements in the app like buttons can be dragged and dropped around the app as if the users were arranging a PowerPoint presentation. But creating the menus that let users run specific database queries (like, say, searching for all supplies that were delivered to a specific location at a specific time) requires basic coding in the form of Microsoft Power Fx formulas.

“This is when it goes from no code to low code,” says Lamanna. “You go from drag and drop, click click click, to writing formulas. And that quickly becomes complex.” That makes it the right time to call for an assist from machine learning.

Instead of having users learn how to make database queries in Power Fx, Microsoft is updating PowerApps so they can simply write out their query in natural language, which GPT-3 then translates into usable code. For example, instead of searching the database with the query `FirstN(Sort(Search('BC Orders', "Super_Fizzy", "aib_productname"), 'Purchase Date', Descending), 10)`, a user can just write “Show 10 orders that have Super Fizzy in the product name and sort by purchase date with newest on the top,” and GPT-3 will produce the correct code.
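To make that formula less opaque, here is a rough Python sketch of what it computes, reading from the innermost function outward: search, then sort, then take the first 10. This is not how Power Fx actually evaluates formulas. The helper functions and the sample rows are hypothetical stand-ins, and the search step assumes a case-insensitive substring match, which is how Power Fx's `Search` is documented to behave.

```python
from datetime import date

# Hypothetical sample rows standing in for the 'BC Orders' table.
# Field names mirror the formula; the values are invented for illustration.
bc_orders = [
    {"aib_productname": "Super_Fizzy Cola", "Purchase Date": date(2021, 5, 3)},
    {"aib_productname": "Classic Cola", "Purchase Date": date(2021, 5, 4)},
    {"aib_productname": "super_fizzy lime", "Purchase Date": date(2021, 5, 7)},
]

def search(table, text, column):
    """Approximates Search(): keep rows whose column contains text, ignoring case."""
    needle = text.lower()
    return [row for row in table if needle in str(row[column]).lower()]

def sort_desc(table, column):
    """Approximates Sort(..., column, Descending): newest dates first."""
    return sorted(table, key=lambda row: row[column], reverse=True)

def first_n(table, n):
    """Approximates FirstN(): the first n rows of the table."""
    return table[:n]

# Same nesting as the Power Fx formula: FirstN(Sort(Search(...), ...), 10)
latest = first_n(
    sort_desc(search(bc_orders, "Super_Fizzy", "aib_productname"), "Purchase Date"),
    10,
)

for row in latest:
    print(row["Purchase Date"], row["aib_productname"])
```

The natural-language request spells out the same three steps (filter by product name, sort newest first, keep 10), which is part of why this kind of single-expression query is a tractable target for translation.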

It’s a simple trick, but it has the potential to save time for millions of users, while also enabling non-coders to build products previously out of their reach. “I remember when we got the first prototype working on a Friday night, I used it, and I was like ‘oh my god, this is creepy good,’” says Lamanna. “I haven’t felt this way using technology for a long, long time.”

The feature will be available in preview in June, but Microsoft is not the first to use machine learning in this way. A number of AI-assisted coding programs have appeared in recent years, including some, like Deep TabNine, that are also powered by the GPT series. These programs show promise but are not yet widely used, mostly due to issues of reliability.

Programming languages are notoriously fickle, with tiny errors capable of crashing entire systems. And the output of AI language models is often haphazard, mixing up words and phrases and contradicting itself from sentence to sentence. The result is that it often requires coding experience to check the output of AI coding autocomplete programs. That, of course, undermines their appeal for novices.

But Microsoft’s implementation has one big advantage over other systems: Power Fx is extremely simple. The language has its roots in Microsoft Excel formulas, explains Lamanna, and is very constrained in what it can do. “It’s data-binding, single-line expressions; there’s no concept of build and compile. What you write just computes instantly,” he says. It has nothing like the power or flexibility of a programming language like Python or JavaScript, but that also means it doesn’t have as much room to commit AI-assisted errors.

As an additional safeguard, the PowerApps interface will also require that users confirm all Power Fx formulas generated from their input. Lamanna argues that this will not only reduce mistakes but even teach users how to code over time. This seems like an optimistic read. What’s equally likely is that people will unthinkingly confirm the first option they’re given by the computer, as we tend to do with so many pop-up nuisances, from cookies to Ts&Cs.

Mitigating bias

The feature accelerates Microsoft’s “low code, no code” ambitions, but it’s also noteworthy as a major commercial application of GPT-3, one of a new breed of AI language models that dominate the contemporary AI landscape.

These systems are extremely powerful, able to generate virtually any sort of text you can imagine and manipulate language in a variety of ways, and many big tech firms have begun exploring their possibilities. Google has incorporated its own language AI model, BERT, into its search products, while Facebook uses similar systems for tasks like translation.

But these models also have their problems. The core of their capacity often comes from studying language patterns found in huge vats of text data scraped from the web. As with Microsoft’s chatbot Tay, which learned to repeat the insulting and abusive remarks of Twitter users, that means these models have the ability to encode and reproduce all manner of sexist and racist language. The text they produce can also be toxic in unexpected ways. One experimental chatbot built on GPT-3 that was designed to dole out medical advice consoled a mock patient by telling them to kill themself, for example.

The challenge of mitigating these risks depends on the exact function of the AI. In Microsoft’s case, using GPT-3 to create code means the danger is low, says Lamanna, but not nonexistent. The company has fine-tuned GPT-3 to “translate” into code by training it on examples of Power Fx formulas, but the core of the program is still based on language patterns learned from the web, meaning it retains this potential for toxicity and bias.

Lamanna gives the example of a user asking the program to find “all job applicants that are good.” How will it interpret that command? It’s within GPT-3’s power to invent criteria in order to answer the question, and it’s possible it might assume that “good” is synonymous with white-sounding names, given that this is one of a number of categories favored by biased hiring practices.

Microsoft says it’s addressing this issue in a number of ways. The first is implementing a ban list of words and phrases that the system just won’t respond to. “If you’re poking the AI to generate something bad, we’re not going to generate it for you,” says Lamanna. And if the system produces something it thinks might be problematic, it’ll prompt users to report it to tech support. Then, someone will come and register the problem (and hopefully fix it).
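Microsoft hasn’t described how its ban list is implemented, so the following is only a hypothetical illustration of the general idea: normalize the user’s request, and refuse to generate anything if it contains a blocked phrase. The phrases, function names, and the placeholder return value are all invented for this sketch; a real system would call the model where the placeholder string is.

```python
import re
from typing import Optional

# Hypothetical ban list; Microsoft has not published its actual entries.
BANNED_PHRASES = ["banned phrase", "blocked term"]

def normalize(text: str) -> str:
    """Lowercase and collapse runs of whitespace so trivial variations still match."""
    return re.sub(r"\s+", " ", text.lower()).strip()

def is_blocked(prompt: str) -> bool:
    """Return True if the prompt contains any banned phrase as whole words."""
    cleaned = normalize(prompt)
    return any(
        re.search(rf"\b{re.escape(phrase)}\b", cleaned) for phrase in BANNED_PHRASES
    )

def handle_request(prompt: str) -> Optional[str]:
    """Refuse blocked prompts; otherwise return a placeholder for generated code."""
    if is_blocked(prompt):
        return None  # nothing is generated for a blocked request
    return f"<formula for: {prompt}>"  # hypothetical stand-in for a model call

print(handle_request("Show 10 orders"))
print(handle_request("Show me the  BLOCKED   term list"))
```

A list like this only catches phrasings someone anticipated, which is consistent with Lamanna’s own caveat that the filter is not perfect.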

But making the program safe without limiting its functionality is difficult, says Lamanna. Filtering by race, religion, or gender can be discriminatory, but it can also have legitimate applications, and it sounds like Microsoft is still working out how to tell the difference.

“Like any filter, it’s not perfect,” says Lamanna, emphasizing that users will have to confirm any formula written by the AI, and implying that any abuses of the program will ultimately be their responsibility. “The human does choose to inject the expression. We never inject the expression automatically,” he says.

Despite these and other unanswered questions about the program’s utility, it’s clear that this is the start of a much bigger experiment for Microsoft. It’s not hard to imagine a similar feature being integrated into Microsoft Excel, where it would reach hundreds of millions of users and dramatically expand the accessibility of this product.

When asked about this possibility, Lamanna demurs (it’s not his domain), but he does say that the plan is to make GPT-3-assisted coding available wherever Power Fx itself can be accessed. “And Power Fx is showing up in lots of different places in Microsoft products,” he says. So expect to see AI completing your code much more frequently in the future.

Source: “Microsoft has built an AI-powered autocomplete for code using GPT-3,” The Verge, https://ift.tt/3vmMqtV